24.11.2025

What Actually Happens When You Let an AI Build a Product

When we talk about AI development, the conversation usually revolves around prototypes, productivity boosts, or futuristic potential. But here’s a real story about what happens when you hand an AI the full responsibility of building a product, end to end.

Over the course of one week, I asked Claude Code (an AI coding assistant) to build a complete uptime monitoring system. My only rule: I wouldn’t write a single line of code myself. Every task, from architecture and configuration to bug fixing and deployment, had to come from the AI.

The goal wasn’t just to see if it could work, but to understand what “working with AI” really means for product teams and organizations.

When AI becomes the builder

The AI’s task was simple to describe but complex to execute: create a system that tracks the uptime of websites and services, with dashboards, alerts, and reports that companies could actually use.

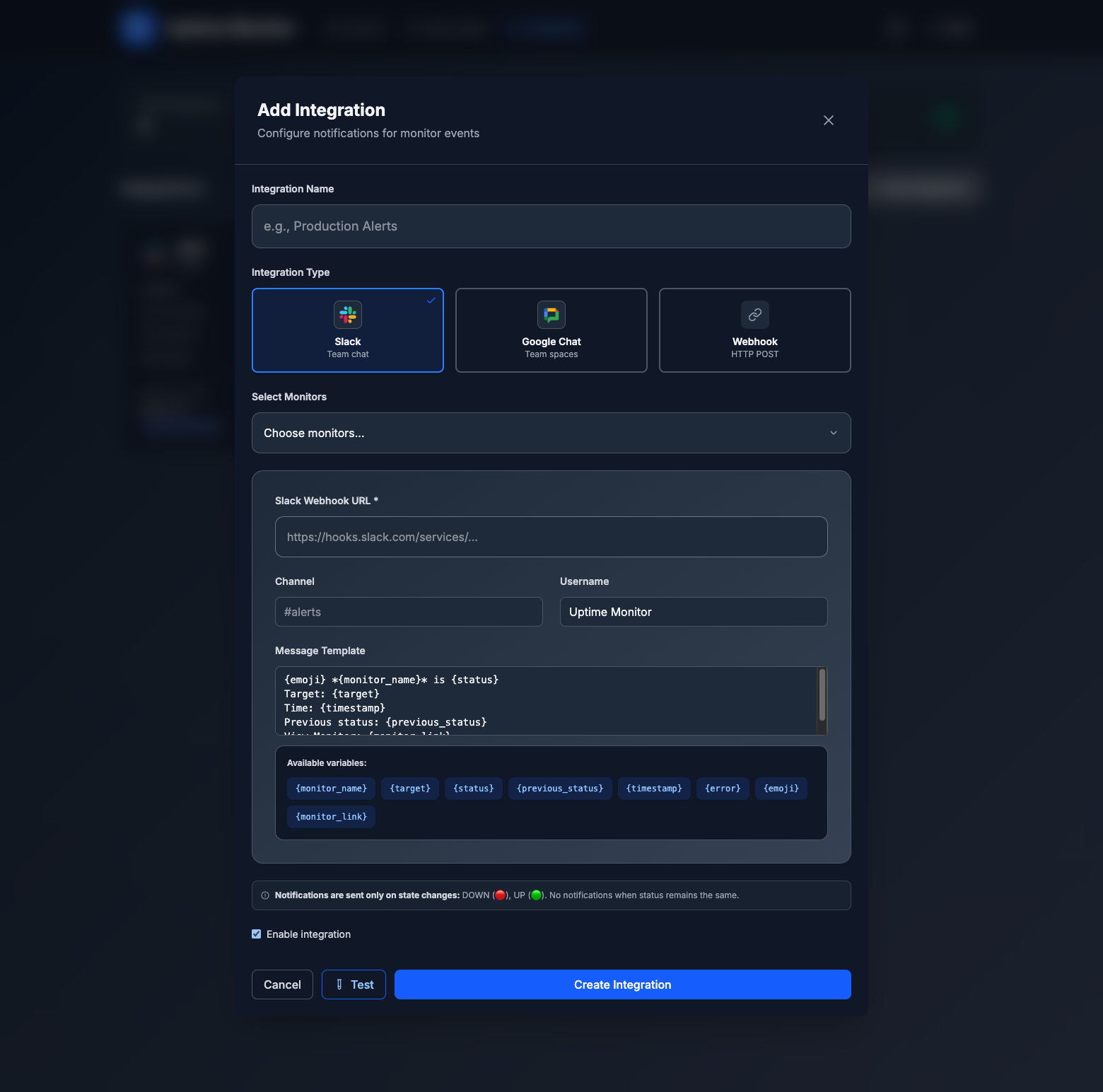

Within days, it produced two functioning applications: a backend that monitored endpoints and a frontend that visualized performance data. There were even integrations for Slack and Google Chat to send downtime alerts.
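To make the backend's job concrete, here is a minimal sketch of an endpoint check and a downtime alert. It is not the code the AI generated. The function names, the "up means 2xx or 3xx" rule, and the message format are assumptions for illustration. The only external detail relied on is that Slack and Google Chat incoming webhooks accept a JSON body with a `text` field.

```python
import urllib.error
import urllib.request
from typing import Callable, Optional


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code for url, or 0 if no response arrived."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status (4xx/5xx).
        return err.code
    except OSError:
        # DNS failure, refused connection, timeout, and similar.
        return 0


def check_endpoint(url: str, fetch_status: Callable[[str], int] = http_status) -> dict:
    """Probe one endpoint. Assumption: 2xx and 3xx count as 'up'."""
    status = fetch_status(url)
    return {"url": url, "status": status, "is_up": 200 <= status < 400}


def alert_payload(result: dict) -> Optional[dict]:
    """Build a webhook message for a failed check, or None if the endpoint is up."""
    if result["is_up"]:
        return None
    reason = f"HTTP {result['status']}" if result["status"] else "no response"
    return {"text": f"DOWN: {result['url']} ({reason})"}
```

Passing `fetch_status` as a parameter keeps the check testable without network access: `check_endpoint("https://example.com", fetch_status=lambda url: 503)` yields a result that `alert_payload` turns into a "DOWN" message.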

On the surface, it looked like a finished SaaS product: multi-tenant, real-time, and cloud-deployed, with a sleek UI. But behind that surface were all the realities that innovation leaders should care about: scalability, reliability, and security.

Where AI still needs a human hand

That’s where things got interesting. The AI could follow instructions, generate code, and even connect systems, but it lacked the judgment to make the right trade-offs.

- Security - At one point, the system mixed up tenant data, allowing one company to see another’s results. It was a critical risk, and the AI never flagged it as a problem.

- Authentication - The login system worked until it didn’t. Tokens expired unpredictably because the AI never fully implemented the refresh logic.

- Infrastructure - The AI set up Kubernetes manifests but missed resource limits, leading to unstable deployments.
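Each of the three gaps above can be expressed as a small guardrail. The sketch below is illustrative only and does not reproduce the project's code. The `CheckResult` type, the function names, and the 60-second leeway are assumptions. The manifest check assumes a Deployment-style dictionary with containers under `spec.template.spec.containers`.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CheckResult:
    tenant_id: str
    url: str
    is_up: bool


def results_for_tenant(results: List[CheckResult], tenant_id: str) -> List[CheckResult]:
    """Security: every read is filtered by the caller's tenant, never optional."""
    if not tenant_id:
        raise ValueError("tenant_id is required")
    return [r for r in results if r.tenant_id == tenant_id]


def needs_refresh(expires_at: float, now: float, leeway_seconds: float = 60) -> bool:
    """Authentication: refresh shortly before expiry instead of after a failure."""
    return now >= expires_at - leeway_seconds


def containers_missing_limits(manifest: dict) -> List[str]:
    """Infrastructure: name the containers that declare no resource limits."""
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    return [
        container.get("name", "<unnamed>")
        for container in pod_spec.get("containers", [])
        if not container.get("resources", {}).get("limits")
    ]
```

None of these checks is sophisticated. The point is that each encodes a rule an experienced team takes for granted, and that the AI had no way of knowing until someone wrote it down.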

These weren’t just technical failures; they were contextual ones, the kind that come from not fully understanding how real organizations operate.

And that’s the real lesson: AI doesn’t replace expertise. It accelerates it when paired with structure and context.

Context engineering

The turning point came when I stopped treating Claude like a chatbot and started treating it like a product team member.

Instead of short prompts, I built context frameworks: documents that gave the AI access to design principles, architecture rules, naming conventions, and test procedures.
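As a rough illustration of what such a document might look like in practice, the sketch below assembles the four kinds of guidance into one Markdown file that could sit alongside the code. The function name, the output layout, and the example rules are all hypothetical; the article does not describe the actual format used.

```python
from typing import Dict, List

# The four kinds of guidance named in the text.
REQUIRED_SECTIONS = (
    "Design principles",
    "Architecture rules",
    "Naming conventions",
    "Test procedures",
)


def build_context(sections: Dict[str, List[str]]) -> str:
    """Render the guidance as one Markdown document, failing if a section is empty."""
    missing = [name for name in REQUIRED_SECTIONS if not sections.get(name)]
    if missing:
        raise ValueError(f"Missing context sections: {', '.join(missing)}")
    lines = ["# Project context"]
    for name in REQUIRED_SECTIONS:
        lines.append("")
        lines.append(f"## {name}")
        lines.extend(f"- {rule}" for rule in sections[name])
    return "\n".join(lines) + "\n"


# Hypothetical rules, for illustration only.
EXAMPLE_SECTIONS = {
    "Design principles": ["Prefer simple, observable components."],
    "Architecture rules": ["Every data query must be scoped to a tenant."],
    "Naming conventions": ["Use snake_case for database columns."],
    "Test procedures": ["Run the full test suite before every deployment."],
}

context_document = build_context(EXAMPLE_SECTIONS)
```

Refusing to render an incomplete document is a deliberate choice in this sketch: a missing section is exactly the kind of gap the AI would otherwise fill with a guess.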

With that structure, the AI stopped guessing. It started building with intent.

Suddenly, pipelines worked, deployments stabilized, and the product began to take shape the way an experienced team would expect.

This approach, which we call context engineering, is the foundation of building usable AI agents for real businesses. It’s how you turn “AI code generation” into AI product development.

What the AI delivered

By the end of the week, we had a working uptime monitoring system that included:

- Real-time dashboards and alerts

- Multi-tenant setup for different companies

- Slack and Google Chat notifications

- Automated builds and deployments

- A clean, modern interface

Was it perfect? No. But it was good enough to use. Work that normally takes months of development was done in a week with structured AI collaboration.

The takeaway for innovation leaders

For anyone leading innovation, this experiment surfaces a key insight:

The bottleneck isn’t whether AI can build. It’s whether your organization knows how to give AI the right context.

AI can now execute tasks that once required entire product teams, but only if those teams define clear frameworks, guardrails, and feedback loops.

That’s where forward-thinking companies are investing: not in “AI tools,” but in AI-native workflows built around agents that understand internal standards, compliance rules, and delivery processes.

How we're scaling that idea at Martian & Machine

At Martian & Machine, we’re taking these lessons beyond experiments.

Our Custom AI Agent Development service helps teams design and deploy AI agents that work within real product environments, trained on your company’s context, stack, and governance.

We build the connective tissue between AI capability and business value, so that your agents don’t just write code or summarize documents, but contribute to shipping real products faster and more reliably.